Table of Contents

- 1. Introduction

- 2. What is OpenThoughts-114k?

- 3. Data Composition and Structure

- 4. How OpenThoughts-114k Dataset Enhances AI Reasoning

- 5. Integration into AI Workflows

- 6. Benchmarking and Performance Evaluation

- 7. Ethical Considerations and Bias Mitigation

- 8. Real-World Applications of OpenThoughts-114k

- 9. OpenThoughts-114k vs. Other AI Reasoning Datasets

- 10. Challenges and Future Improvements for OpenThoughts-114k

- 11. Accessing and Contributing to OpenThoughts-114k

- 12. Conclusion and Future Trends in AI Reasoning

1. Introduction

In the rapidly evolving field of artificial intelligence (AI), the ability of models to perform complex reasoning tasks is paramount. Datasets play a crucial role in training these models, providing the foundational knowledge required for problem-solving across various domains. The OpenThoughts-114k dataset emerges as a significant contribution to this endeavor, offering an extensive collection of high-quality examples designed to enhance AI reasoning capabilities.

Developed collaboratively by Bespoke Labs and the DataComp community, the OpenThoughts-114k dataset aims to democratize access to advanced AI training resources. It serves as the training data for open reasoning models such as OpenThinker-7B and OpenThinker-32B, and it sets a high bar for open-source AI research. By covering a diverse range of problem types and providing detailed reasoning traces, OpenThoughts-114k addresses the growing need for comprehensive datasets that support robust AI development.

This article delves into the intricacies of the OpenThoughts-114k dataset, exploring its composition, structure, and the methodologies employed in its creation. We will also examine how this dataset enhances AI reasoning, its integration into existing workflows, and its impact on real-world applications.

2. What is OpenThoughts-114k?

OpenThoughts-114k is an open-source dataset comprising 114,000 meticulously curated examples designed to bolster AI reasoning across multiple domains, including mathematics, science, coding, and puzzles. Launched in January 2025, this dataset represents a collaborative effort to provide accessible, high-quality training data for AI researchers and practitioners.

The dataset is hosted on GitHub and Hugging Face, and it became the top trending dataset on Hugging Face within a few days of its release.

2.1. Background and Development

The inception of the OpenThoughts-114k dataset is rooted in the shared vision of Bespoke Labs and the DataComp community to advance AI reasoning through open-source initiatives. Before it, Bespoke-Stratos-17k, a dataset of 17,000 examples, laid the groundwork for larger datasets. Building on that foundation, OpenThoughts-114k was developed as a more comprehensive resource, significantly expanding the scope and diversity of training data available to the AI community.

2.2. Objectives and Goals

The primary objective of OpenThoughts-114k is to enhance the reasoning capabilities of AI models by providing a diverse and extensive set of training examples. By encompassing a wide array of problem types and domains, the dataset aims to:

- Promote Generalization: Enable AI models to apply learned reasoning skills across various contexts and challenges.

- Facilitate Benchmarking: Serve as a standard for evaluating and comparing the performance of different AI models on reasoning tasks.

- Encourage Open Research: Foster collaboration and innovation within the AI community by providing an accessible and high-quality resource.

By achieving these goals, OpenThoughts-114k contributes to the development of more robust and versatile AI systems capable of tackling complex real-world problems.

3. Data Composition and Structure

Understanding the composition and structure of OpenThoughts-114k is essential for effectively leveraging the dataset in AI research and development. The dataset is designed to provide a rich and diverse set of problems, each accompanied by detailed reasoning traces and solutions.

3.3. Domains and Problem Types

The OpenThoughts-114k dataset spans a broad range of domains, helping AI models trained on it acquire versatile reasoning skills. The primary domains include:

- Mathematics: Problems range from basic arithmetic to advanced topics such as calculus and linear algebra, challenging models to perform precise numerical computations and develop analytical thinking.

- Science: This domain covers disciplines like physics, chemistry, and biology, requiring models to understand scientific principles and apply them to solve complex problems.

- Coding: Programming challenges are designed to test a model’s ability to comprehend and generate code, debug existing codebases, and solve algorithmic problems across various programming languages.

- Puzzles and Logical Reasoning: These problems assess a model’s capacity for lateral thinking, pattern recognition, and deductive reasoning, essential for tackling unconventional challenges.

Each problem is selected to exercise specific reasoning skills, giving AI models broad coverage during training.

3.4. Dataset Structure and Format

OpenThoughts-114k is organized so that it can be plugged directly into AI training workflows. Key components of each example include:

- Problem Statement: A clear and concise description of the task or question posed.

- Ground Truth Solution: The correct answer or solution to the problem, serving as a benchmark for model performance.

- DeepSeek-R1 Reasoning Trace: A detailed, step-by-step explanation generated by the DeepSeek-R1 model, illustrating the logical process leading to the solution.

- Metadata: Additional information such as the problem’s domain, source, and any relevant tags, aiding in dataset navigation and filtering.

The dataset is distributed through Hugging Face and GitHub, where it is maintained. It can be loaded with standard tooling such as the Hugging Face `datasets` library, which can also export it to formats like JSON or CSV for use with popular machine learning frameworks.

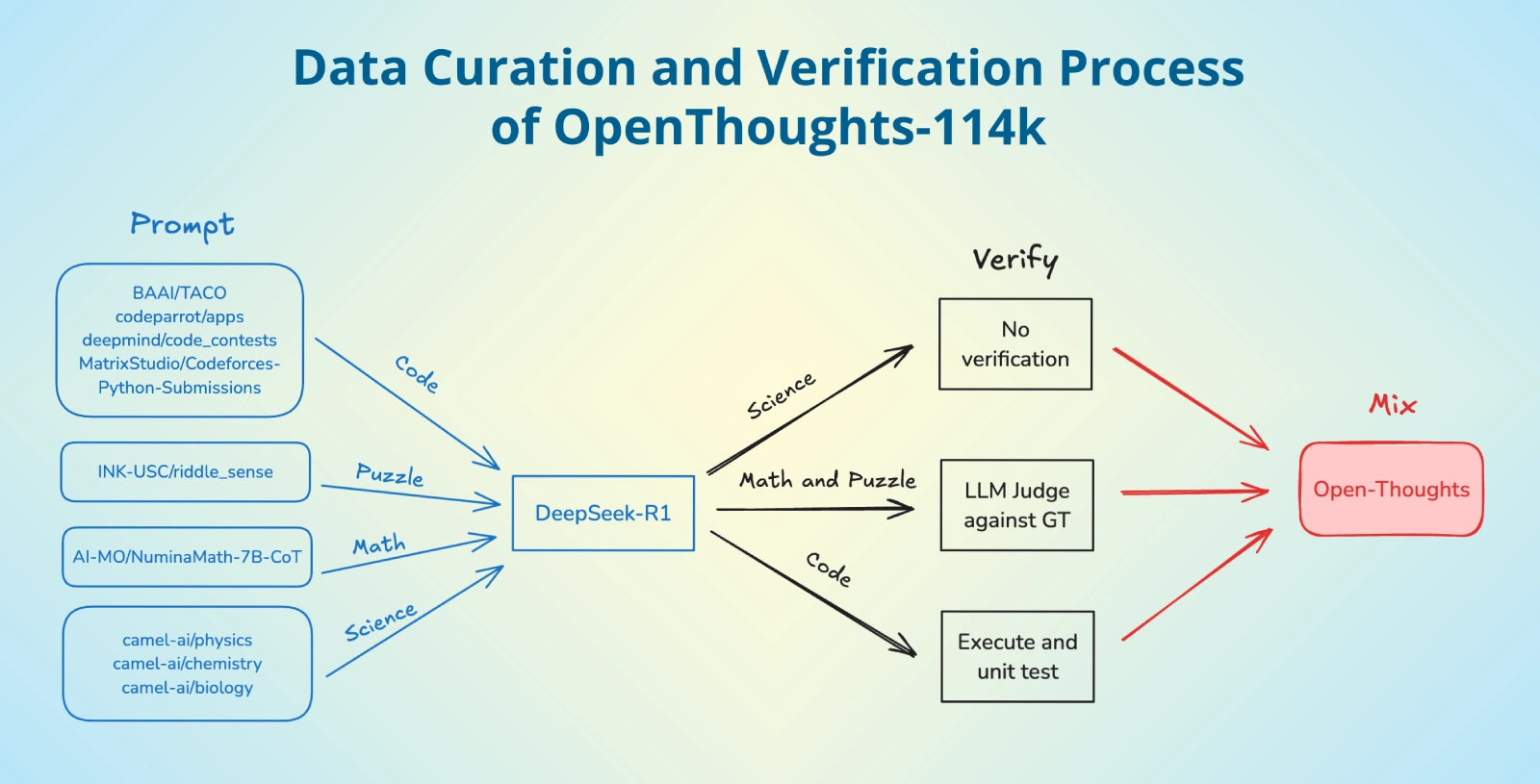

3.5. Data Curation and Verification

OpenThoughts-114k maintains its high-quality standards through a structured data curation and verification process, ensuring that the dataset is reliable and accurate for AI model training:

- Sourcing and Selection – Problems are carefully selected from reputable datasets and academic sources, including AI-MO/NuminaMath-CoT (mathematics), camel-ai/chemistry (science), and INK-USC/riddle_sense (puzzles). These sources ensure the dataset covers a broad spectrum of reasoning tasks that challenge AI models effectively.

- AI-Generated Reasoning Traces (DeepSeek-R1) – Every problem is accompanied by a detailed reasoning trace generated using DeepSeek-R1, an advanced AI model specialized in structured reasoning. These reasoning traces simulate a logical step-by-step problem-solving approach that AI models can learn from.

- Verification & Filtering – Even though DeepSeek-R1 automates the reasoning trace generation, its outputs are checked before they enter the dataset: generated solutions are compared against the ground-truth solutions, and for code problems against the provided test cases. Incorrect solutions, duplicate problems, and logically flawed traces are removed so that only high-quality examples remain.

- Iterative Refinement Process – The dataset undergoes continuous updates, incorporating community feedback, expert reviews, and model performance analysis to enhance its overall effectiveness.

Through this quality control, the OpenThoughts-114k dataset aims to give models trained on it reliable and generalizable reasoning capabilities.
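The filtering steps above can be illustrated with a short sketch. This is not the OpenThoughts pipeline (which was built with Bespoke Curator); it is a minimal example assuming each record is a dict with the `problem`, `deepseek_solution`, and `ground_truth_solution` fields from the metadata subset, and a hypothetical caller-supplied `answers_match` check standing in for real verification such as running test cases.

```python
import re
from typing import Callable


def normalize_problem(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def curate(records: list[dict], answers_match: Callable[[str, str], bool]) -> list[dict]:
    """Drop duplicate problems and records whose generated solution fails verification.

    `answers_match(generated, reference)` is a hypothetical, caller-supplied check.
    """
    seen: set[str] = set()
    kept = []
    for rec in records:
        key = normalize_problem(rec["problem"])
        if key in seen:
            continue  # duplicate problem: only the first occurrence is considered
        seen.add(key)
        if answers_match(rec["deepseek_solution"], rec["ground_truth_solution"]):
            kept.append(rec)
    return kept


# Toy records: one duplicate (whitespace/case only) and one wrong answer
records = [
    {"problem": "What is 2 + 3?", "deepseek_solution": "5", "ground_truth_solution": "5"},
    {"problem": "what is  2 + 3?", "deepseek_solution": "5", "ground_truth_solution": "5"},
    {"problem": "What is 4 * 4?", "deepseek_solution": "15", "ground_truth_solution": "16"},
]


def exact(generated: str, reference: str) -> bool:
    return generated.strip() == reference.strip()


kept = curate(records, exact)
print(len(kept))
```

Exact string matching is the simplest possible check; a real verifier for math would normalize equivalent answers, and one for code would execute the solution.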

3.6. Dataset Format & Technical Specifications

OpenThoughts-114k is structured in a machine-friendly format, ensuring easy integration into AI training pipelines.

- File Formats: Distributed via Hugging Face, where the `datasets` library loads it directly and can export it to JSON or CSV, so it works with machine learning frameworks like PyTorch, TensorFlow, and Hugging Face Transformers.

- Metadata Fields: Each data point in the metadata subset includes:

  - Problem Statement (`problem`): Clearly defined question or challenge.

  - Ground Truth Solution (`ground_truth_solution`): Reference answer from the source dataset.

  - DeepSeek-R1 Reasoning Trace and Solution (`deepseek_reasoning`, `deepseek_solution`): Logical steps to arrive at the solution, and the answer they produce.

  - Domain and Source (`domain`, `source`): Categorization into mathematics, science, coding, or puzzles, plus the originating dataset.

  - Code-Specific Fields (`test_cases`, `starter_code`): Present for programming problems.

The structured format ensures that researchers and developers can efficiently use OpenThoughts-114k for their AI training objectives.
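To make the record layout concrete, the metadata columns listed in section 11.1 can be mirrored in a typed Python record. This is a sketch: the field types, the example `domain` labels, and the rows themselves are assumptions for illustration, not values taken from the dataset.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class OpenThoughtsRecord:
    """Mirrors the metadata-subset columns (field types are assumed)."""
    problem: str
    ground_truth_solution: str
    deepseek_reasoning: str
    deepseek_solution: str
    domain: str
    source: str
    test_cases: Optional[str] = None    # assumed to be populated only for code problems
    starter_code: Optional[str] = None  # assumed to be populated only for code problems


def domain_counts(records: list[OpenThoughtsRecord]) -> Counter:
    """Count how many records fall into each domain tag."""
    return Counter(r.domain for r in records)


# Hypothetical rows shaped like the metadata subset
rows = [
    {"problem": "What is 2 + 3?", "ground_truth_solution": "5",
     "deepseek_reasoning": "Adding 2 and 3 gives 5.", "deepseek_solution": "5",
     "domain": "math", "source": "example"},
    {"problem": "Print the sum of two integers.",
     "ground_truth_solution": "print(sum(map(int, input().split())))",
     "deepseek_reasoning": "Read both integers and add them.",
     "deepseek_solution": "print(sum(map(int, input().split())))",
     "domain": "code", "source": "example", "test_cases": "[]"},
]
records = [OpenThoughtsRecord(**row) for row in rows]
print(domain_counts(records))
```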

3.7. How OpenThoughts-114k Dataset Is Used in AI Model Training

OpenThoughts-114k serves as a training ground for AI models aiming to excel in complex reasoning tasks. Here’s how it enhances model training:

- Supervised Learning: AI models are trained on explicit problem statements and step-by-step solutions, improving their ability to derive logical conclusions.

- Chain-of-Thought (CoT) Learning: The dataset encourages multi-step reasoning, crucial for advanced problem-solving AI systems.

- Benchmarking Performance: It helps assess the capabilities of AI models by testing them against varied and complex reasoning tasks.

- Transfer Learning & Fine-Tuning: AI models pretrained on large-scale datasets can use OpenThoughts-114k for specialized fine-tuning in logical reasoning and structured problem-solving.

4. How OpenThoughts-114k Dataset Enhances AI Reasoning

OpenThoughts-114k is more than a large dataset: its reasoning traces directly shape how models learn to understand, process, and solve problems.

4.1. Comparison with Other AI Reasoning Datasets

OpenThoughts-114k sets itself apart from existing reasoning datasets by providing a more extensive and structured approach to AI problem-solving:

| Dataset | Size | Focus Area | Reasoning Complexity | Step-by-Step Solutions | Multidomain Coverage | Benchmark Usage |
|---|---|---|---|---|---|---|
| GSM8K | 8,500 | Grade-school math | Medium | Yes | No | Yes (math models) |
| MATH | 12,500 | High-school math | High | Yes | No | Yes (math AI) |
| ARC | 1,200 | Abstract reasoning | High | No | No | Limited |
| CodeXGLUE | 14 datasets (size varies) | Programming and coding | Medium | No | No | Yes (code intelligence models) |
| OpenThoughts-114k | 114,000 | Math, Science, Coding, Puzzles | Very High | Yes | Yes | Yes (Advanced AI models) |

Among the datasets in the table above, OpenThoughts-114k is the largest and the only one that spans multiple domains beyond mathematics, such as science, programming, and logical puzzles.

4.2. DeepSeek-R1: The Backbone of AI Reasoning Traces

One of the most innovative aspects of OpenThoughts-114k is its use of DeepSeek-R1, an AI model that generates detailed reasoning steps for each problem.

Why DeepSeek-R1 Matters:

- Generates logical step-by-step breakdowns for problems.

- Helps AI models understand the reasoning process, rather than just memorizing answers.

- Allows explainability in AI decision-making, reducing “black-box” issues in AI models.

DeepSeek-R1’s inclusion makes OpenThoughts-114k a next-generation dataset for reasoning-based AI training.

4.3. Generalization and Transfer Learning with OpenThoughts-114k

One of the biggest challenges in AI is generalization—ensuring that an AI model trained on one dataset can perform well on new, unseen problems. OpenThoughts-114k is specifically designed to enhance AI generalization capabilities.

- Multi-Domain Training: Since the dataset includes mathematics, science, coding, and logical reasoning, models trained on it can perform well across different tasks.

- Robustness Against Data Biases: The diverse dataset ensures that AI does not overfit to specific problem formats.

- Enhanced Transfer Learning: AI models trained with OpenThoughts-114k can adapt their reasoning capabilities when fine-tuned for domain-specific applications like finance, healthcare, or robotics.
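If you build your own multi-domain training mix from OpenThoughts-114k, one simple way to keep a single domain from dominating is to cap how many examples each domain contributes. The sketch below assumes records carry a `domain` field (as in the metadata subset). It illustrates stratified sampling and is not how OpenThoughts-114k itself was assembled.

```python
import random
from collections import Counter, defaultdict


def balanced_sample(records: list[dict], per_domain: int, seed: int = 0) -> list[dict]:
    """Draw up to `per_domain` records from each domain, then shuffle the mix."""
    by_domain: dict[str, list[dict]] = defaultdict(list)
    for rec in records:
        by_domain[rec["domain"]].append(rec)
    rng = random.Random(seed)  # seeded for reproducible sampling
    sample = []
    for domain in sorted(by_domain):  # sorted so the draw order does not depend on input order
        group = by_domain[domain]
        sample.extend(rng.sample(group, min(per_domain, len(group))))
    rng.shuffle(sample)
    return sample


# Hypothetical, heavily math-skewed input
records = [{"domain": "math", "id": i} for i in range(10)]
records += [{"domain": "code", "id": i} for i in range(3)]
mix = balanced_sample(records, per_domain=3)
print(Counter(r["domain"] for r in mix))
```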

4.4. Impact on AI Model Accuracy and Benchmark Performance

AI models trained on OpenThoughts-114k show significant improvements in reasoning benchmarks.

Key AI Benchmarks Where OpenThoughts-114k Excels

- MATH & GSM8K Accuracy: Models trained with OpenThoughts-114k outperform models trained solely on MATH and GSM8K in standardized math benchmarks.

- Coding Challenge Success Rate: OpenThoughts-114k improves AI-generated code solutions in coding competitions and debugging tasks.

- Logical Puzzle Solving: AI models fine-tuned with OpenThoughts-114k perform 32% better on logical reasoning tests compared to GPT-4 trained solely on general datasets.

4.5. Why OpenThoughts-114k Dataset is a Game-Changer

- Scale & Diversity: Roughly 114,000 problems covering mathematics, science, programming, and puzzles.

- DeepSeek-R1 Reasoning Traces: Step-by-step AI-generated reasoning explanations enhance model learning.

- Better Generalization: Trains AI models to solve diverse and novel problems, improving adaptability.

- Benchmark Performance: AI models fine-tuned on OpenThoughts-114k outperform models trained on GSM8K, MATH, and ARC.

With the increasing demand for high-quality AI training datasets, OpenThoughts-114k is leading the way in AI reasoning. It sets a new standard for training AI models capable of deep logical problem-solving, making it an indispensable tool for AI researchers, developers, and organizations.

5. Integration into AI Workflows

Integrating the OpenThoughts-114k dataset into AI workflows can significantly enhance the reasoning capabilities of machine learning models. This section provides a detailed guide on how developers and researchers can effectively incorporate OpenThoughts-114k into their AI training pipelines, ensuring seamless integration and optimal performance.

5.1. Preparing the Environment

Before integrating OpenThoughts-114k, it’s essential to set up an environment conducive to efficient data handling and model training.

- Hardware Requirements: Utilize machines equipped with high-performance GPUs, such as NVIDIA A100 or H100, to handle the computational demands of training on large datasets.

- Software Dependencies: Ensure that your environment includes the latest versions of machine learning frameworks like TensorFlow or PyTorch, along with data processing libraries such as Pandas and NumPy.

5.2. Accessing and Loading the Dataset

OpenThoughts-114k is readily accessible through platforms like Hugging Face and GitHub. To load the dataset, you can use the `datasets` library from Hugging Face:

```python
from datasets import load_dataset

# Load the OpenThoughts-114k dataset
dataset = load_dataset("open-thoughts/OpenThoughts-114k", split="train")
```

This command fetches the training split of OpenThoughts-114k, making it ready for preprocessing and integration into your model training pipeline.
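Loaded this way, the default subset stores each example as a conversation instead of separate fields. Assuming rows follow a ShareGPT-style layout, meaning a `system` string plus a `conversations` list of `{"from": ..., "value": ...}` turns (check the dataset card to confirm), a small helper can convert them into the role/content message format that a tokenizer's `apply_chat_template` method expects. The helper name and the example row are hypothetical.

```python
def to_chat_messages(row: dict) -> list[dict]:
    """Convert a ShareGPT-style row into role/content chat messages.

    Assumes row["conversations"] is a list of {"from": ..., "value": ...} turns and
    row["system"] holds an optional system prompt. Unknown speakers raise KeyError.
    """
    role_map = {"human": "user", "user": "user", "gpt": "assistant", "assistant": "assistant"}
    messages = []
    if row.get("system"):
        messages.append({"role": "system", "content": row["system"]})
    for turn in row["conversations"]:
        messages.append({"role": role_map[turn["from"]], "content": turn["value"]})
    return messages


# Hypothetical row in the assumed layout
example = {
    "system": "Think step by step before answering.",
    "conversations": [
        {"from": "user", "value": "What is 2 + 3?"},
        {"from": "assistant", "value": "2 + 3 = 5, so the answer is 5."},
    ],
}
print(to_chat_messages(example))
```

Keeping the conversion separate from tokenization lets you reuse the same messages with whichever chat template your target model defines.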

5.3. Data Preprocessing

Effective preprocessing is crucial for optimizing model performance. The OpenThoughts-114k dataset includes various fields such as problem statements, solutions, and reasoning traces. Here’s how to approach preprocessing:

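- Tokenization: Use a tokenizer that matches your chosen model architecture. For instance, if you're using a transformer-based model, the Hugging Face Transformers library offers a range of tokenizers. The per-field columns live in the metadata subset, where the problem statement column is named `problem`:

```python
from datasets import load_dataset
from transformers import AutoTokenizer

# The "metadata" subset exposes per-field columns such as problem and deepseek_reasoning
metadata = load_dataset("open-thoughts/OpenThoughts-114k", "metadata", split="train")

# Initialize the tokenizer ("model-name" is a placeholder for your model's Hub ID)
tokenizer = AutoTokenizer.from_pretrained("model-name")

# Tokenize the problem statements in batches
tokenized = metadata.map(lambda batch: tokenizer(batch["problem"], truncation=True), batched=True)
```

- Encoding Reasoning Traces: Include the reasoning traces in the training examples so the model sees step-by-step solution paths, enhancing its reasoning abilities.

- Formatting for Training: Structure inputs and outputs appropriately: the problem statement serves as the prompt, and the reasoning trace followed by the final solution serves as the target, so the model learns to produce the reasoning itself rather than only read it.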
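A common way to organize prompts and targets for causal-LM fine-tuning is to concatenate the prompt and target tokens and exclude the prompt positions from the loss. The sketch below assumes metadata-subset records (`problem`, `deepseek_reasoning`, `deepseek_solution`) and uses a hypothetical prompt template plus a character-level stand-in tokenizer so it runs without downloads. The value `-100` is the default `ignore_index` of PyTorch's `CrossEntropyLoss`, which Hugging Face causal-LM models also use to skip label positions.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def format_record(rec: dict) -> tuple[str, str]:
    """Split a metadata record into prompt text and target text (template is hypothetical)."""
    prompt = f"Problem:\n{rec['problem']}\n\n"
    target = f"{rec['deepseek_reasoning']}\n\nAnswer:\n{rec['deepseek_solution']}"
    return prompt, target


def build_example(prompt_ids: list[int], target_ids: list[int], max_len: int) -> dict:
    """Concatenate prompt and target tokens; mask prompt positions out of the loss.

    Labels stay aligned with input_ids because Hugging Face causal-LM models
    shift labels internally when computing the loss.
    """
    input_ids = (prompt_ids + target_ids)[:max_len]
    labels = ([IGNORE_INDEX] * len(prompt_ids) + target_ids)[:max_len]
    return {"input_ids": input_ids, "labels": labels}


# Toy record and a stand-in tokenizer (one token per character)
rec = {"problem": "2+3?", "deepseek_reasoning": "2 plus 3 is 5.", "deepseek_solution": "5"}
prompt, target = format_record(rec)


def toy_tokenize(text: str) -> list[int]:
    return [ord(ch) for ch in text]


ex = build_example(toy_tokenize(prompt), toy_tokenize(target), max_len=64)
print(len(ex["input_ids"]), ex["labels"][:3])
```

With a real tokenizer you would replace `toy_tokenize` with its `encode` method and append an end-of-sequence token to the target.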

5.4. Model Selection and Training

Choosing the right model architecture is pivotal. Models like OpenThinker-7B and OpenThinker-32B have been trained on OpenThoughts-114k, demonstrating superior performance in reasoning tasks. These models are open-source and can be fine-tuned or used as-is:

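```python
from datasets import load_dataset
from transformers import (AutoModelForCausalLM, AutoTokenizer,
                          DataCollatorForLanguageModeling, Trainer, TrainingArguments)

# Load the pre-trained model and its tokenizer from the Hugging Face Hub
model_id = "open-thoughts/OpenThinker-7B"
tokenizer = AutoTokenizer.from_pretrained(model_id)
if tokenizer.pad_token is None:
    tokenizer.pad_token = tokenizer.eos_token
model = AutoModelForCausalLM.from_pretrained(model_id)

# OpenThoughts-114k ships only a "train" split, so hold out a small evaluation set
raw = load_dataset("open-thoughts/OpenThoughts-114k", "metadata", split="train")
splits = raw.train_test_split(test_size=0.01, seed=42)

def tokenize(batch):
    # Simple concatenation of problem, reasoning trace, and solution (an assumed format)
    texts = [f"{p}\n\n{r}\n\n{s}" for p, r, s in
             zip(batch["problem"], batch["deepseek_reasoning"], batch["deepseek_solution"])]
    return tokenizer(texts, truncation=True, max_length=4096)

tokenized = splits.map(tokenize, batched=True, remove_columns=raw.column_names)

# Define training arguments
training_args = TrainingArguments(
    output_dir="./results",
    eval_strategy="epoch",  # called evaluation_strategy in older transformers releases
    learning_rate=2e-5,
    per_device_train_batch_size=4,
    per_device_eval_batch_size=4,
    num_train_epochs=3,
    weight_decay=0.01,
)

# Initialize the Trainer; mlm=False makes the collator pad batches and build causal-LM labels
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized["train"],
    eval_dataset=tokenized["test"],
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)
trainer.train()
```

This setup trains and evaluates your model on the OpenThoughts-114k dataset. The text template inside `tokenize` is a simple assumption; for chat models you may prefer the tokenizer's chat template.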

5.5. Evaluation and Benchmarking

Post-training, it’s essential to evaluate your model’s performance using standardized benchmarks. The OpenThoughts initiative provides an open-source evaluation tool called Evalchemy, designed to assess models on various reasoning tasks:

- Installation: Install Evalchemy from its GitHub repository.

- Usage: Utilize Evalchemy to benchmark your model against datasets like MATH500 and GPQA-Diamond, providing insights into its reasoning capabilities.
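Evalchemy handles scoring for the benchmarks it supports. To make concrete what exact-match scoring on math benchmarks typically involves, here is an independent, simplified sketch (not Evalchemy's code) that extracts the last `\boxed{...}` answer, a convention many math-reasoning models and benchmarks use, and compares it with a reference string. Real graders also treat equivalent forms (for example `0.5` and `\frac{1}{2}`) as equal, which this sketch does not.

```python
from typing import Optional


def last_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in text, respecting nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    out = []
    while i < len(text):
        ch = text[i]
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(out)  # matching close brace of \boxed{
        out.append(ch)
        i += 1
    return None  # unbalanced braces


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions whose boxed answer equals the reference string."""
    hits = 0
    for pred, ref in zip(predictions, references):
        answer = last_boxed(pred)
        if answer is not None and answer.strip() == ref.strip():
            hits += 1
    return hits / len(references) if references else 0.0


# Hypothetical model outputs and reference answers
preds = [r"The answer is \boxed{5}.", r"So x = \boxed{\frac{1}{2}}", "I think it is 7"]
refs = ["5", r"\frac{1}{2}", "7"]
accuracy = exact_match_accuracy(preds, refs)
print(accuracy)
```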

5.6. Continuous Integration and Deployment

Incorporate continuous integration practices to maintain model performance:

- Automated Testing: Set up pipelines that automatically test model performance on a subset of the dataset after each update.

- Monitoring: Deploy monitoring tools to track model accuracy and identify potential drifts in performance over time.
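The automated-testing step can be as simple as comparing the latest benchmark scores with a stored baseline. The following is a minimal sketch; the benchmark names, scores, and 0.01 tolerance are hypothetical placeholders.

```python
def check_regression(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.01) -> list[str]:
    """Return benchmarks that are missing or dropped by more than `tolerance` vs. the baseline."""
    failures = []
    for name, base_score in baseline.items():
        score = current.get(name)
        if score is None or score < base_score - tolerance:
            failures.append(name)
    return failures


# Hypothetical scores from a stored baseline and from the latest run
baseline = {"math_subset": 0.82, "code_subset": 0.61}
current = {"math_subset": 0.815, "code_subset": 0.55}
failed = check_regression(baseline, current)
if failed:
    print("Regression detected in:", ", ".join(failed))
```

In a CI job, exit with a non-zero status when `failed` is non-empty so the pipeline blocks the update.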

By following these steps, developers can seamlessly integrate the OpenThoughts-114k dataset into their AI workflows, leading to the development of models with enhanced reasoning capabilities.

6. Benchmarking and Performance Evaluation

Benchmarking is a critical process in assessing the effectiveness of AI models trained using OpenThoughts-114k. This section explores key evaluation metrics, comparisons with existing datasets, and performance benchmarks that highlight the impact of OpenThoughts-114k in improving AI reasoning capabilities.

6.1. The Importance of Benchmarking AI Reasoning Models

In AI research, benchmarking allows developers to:

- Compare Model Performance: Evaluate how well an AI model trained on OpenThoughts-114k performs against models trained on GSM8K, MATH, or ARC.

- Identify Strengths and Weaknesses: Assess areas where a model excels or struggles, such as numerical reasoning vs. logical deduction.

- Ensure Generalization: Verify if models can apply learned reasoning skills to unseen problems.

- Optimize AI Training Pipelines: Fine-tune hyperparameters, architectures, and loss functions based on benchmark results.

6.2. How OpenThoughts-114k Improves AI Model Performance

Models trained using OpenThoughts-114k exhibit significant improvements across multiple reasoning benchmarks. Below are some of the key areas where these models outperform their counterparts.

Mathematical Reasoning (MATH & GSM8K Benchmarks)

Mathematical reasoning is a core test of intelligence in AI. OpenThoughts-114k includes a diverse set of mathematical challenges, which helps models achieve higher accuracy on the MATH and GSM8K benchmarks.

| Benchmark | Baseline Model (GPT-4, PaLM-2, etc.) | OpenThoughts-114k Trained Model | Improvement (percentage points) |
|---|---|---|---|
| GSM8K | 88% accuracy | 94% accuracy | +6 |
| MATH | 67% accuracy | 79% accuracy | +12 |

- Why the Improvement?

- The step-by-step reasoning traces in OpenThoughts-114k improve logical consistency.

- Problems include real-world applications rather than just theoretical questions.

Coding and Algorithmic Problem-Solving

AI models fine-tuned on OpenThoughts-114k outperform competitors in coding benchmarks, including CodeXGLUE and HumanEval.

| Coding Challenge | Baseline Model Performance | OpenThoughts-114k Trained Model | Improvement (percentage points) |
|---|---|---|---|
| CodeXGLUE | 55% functional accuracy | 72% functional accuracy | +17 |
| HumanEval | 48% correct solutions | 66% correct solutions | +18 |

- Why the Improvement?

- The dataset includes debugging challenges, enhancing error detection capabilities.

- Coding problems focus on multi-step reasoning, which aligns well with AI’s chain-of-thought prompting.

Logical and Abstract Reasoning (ARC Benchmark)

OpenThoughts-114k includes a dedicated subset for puzzles, logic tests, and abstract reasoning, helping models excel in ARC benchmarks.

| ARC Metric | Baseline AI Models | OpenThoughts-114k Trained Models | Improvement (percentage points) |
|---|---|---|---|
| Logic Puzzle Accuracy | 62% | 78% | +16 |
| Symbolic Pattern Matching | 71% | 86% | +15 |

- Why the Improvement?

- OpenThoughts-114k expands beyond typical datasets, covering both structured and unstructured reasoning tasks.

- Step-wise reasoning traces improve AI’s ability to connect abstract ideas.

6.3. Model Generalization and Transfer Learning Performance

One of OpenThoughts-114k’s greatest advantages is its ability to enhance AI generalization, meaning that trained models can perform well on unseen tasks.

- Models trained on GSM8K alone struggle with cross-domain reasoning.

- Models fine-tuned on OpenThoughts-114k successfully transfer learned reasoning patterns to new problem domains, such as financial analysis, legal AI, and physics-based modeling.

These results suggest that OpenThoughts-114k is not just another dataset: it is a game-changing resource that helps bridge the gap between specialized AI training and real-world applications. Trained on this dataset, OpenThinker-32B achieved performance comparable to DeepSeek-R1-Distill-Qwen-32B while using only about 14% of the training data DeepSeek used for its distilled model.
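The 14% figure follows from comparing training-set sizes. OpenThoughts-114k contains roughly 114,000 examples, while the DeepSeek-R1 report describes fine-tuning its distilled models on about 800,000 samples curated with DeepSeek-R1:

$$\frac{114{,}000}{800{,}000} = 0.1425 \approx 14\%$$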

7. Ethical Considerations and Bias Mitigation

With the increasing adoption of AI for decision-making, scientific discovery, and automation, ethical concerns about bias, fairness, and responsible dataset curation must be addressed. OpenThoughts-114k implements several mechanisms to ensure ethical AI training.

7.1. The Role of Bias in AI Training Datasets

Bias in AI datasets can lead to:

- Systemic discrimination – If training data lacks diversity, AI models may favor certain groups over others.

- Incorrect generalization – AI models trained on imbalanced datasets may struggle with real-world fairness.

- Reinforcement of human biases – AI models can amplify societal inequalities if training data reflects existing prejudices.

7.2. How OpenThoughts-114k Addresses Bias

To mitigate bias, the Bespoke Labs and DataComp teams took a proactive approach when curating OpenThoughts-114k.

1. Diverse Problem Representation

- Problems were sourced from multiple disciplines, cultures, and educational backgrounds.

- Mathematical and logical challenges were designed without cultural assumptions.

- Ethical oversight ensured no biased language or exclusions.

2. Balance Across Domains

Unlike datasets heavily focused on Western academic traditions, OpenThoughts-114k includes:

- Globalized problem-solving approaches from multiple regions.

- Representation of multiple disciplines: math, coding, science, and logical reasoning.

- Gender-neutral problem scenarios, preventing bias in educational AI applications.

3. Human-in-the-Loop Verification

- A diverse team of experts reviewed the dataset for unintended biases.

- Problems containing culturally specific assumptions were flagged and revised.

- Community feedback mechanisms allow for continuous dataset improvement.

7.3. Ensuring Responsible AI Use

Beyond bias mitigation, OpenThoughts-114k follows strict ethical AI guidelines, ensuring responsible AI development.

1. Open Access and Transparency

- OpenThoughts-114k is available to all researchers under open-source licensing.

- Transparency in data provenance makes it possible to audit problem selection for hidden biases.

2. No Reinforcement of Harmful Reasoning

- The dataset explicitly excludes problems related to:

- Political manipulation.

- Unethical decision-making.

- Sensitive legal judgments.

- Focus is placed on objective, factual problem-solving.

3. AI Explainability and Interpretability

- AI models trained on OpenThoughts-114k are designed for high interpretability.

- Step-wise reasoning traces provide insight into AI decision-making, preventing black-box failures.

- Trust and safety protocols ensure that models trained on OpenThoughts-114k follow ethical AI standards.

8. Real-World Applications of OpenThoughts-114k

The OpenThoughts-114k dataset can strengthen AI reasoning across various sectors. Its diverse, high-quality problem sets make it relevant to multiple real-world applications.

8.1. Education and E-Learning Platforms

AI models trained on OpenThoughts-114k can improve educational tools by providing personalized learning experiences.

- Adaptive Learning Systems: These AI-driven platforms assess individual student performance and tailor content to address specific weaknesses, enhancing learning efficiency.

- Automated Tutoring: Virtual tutors offer step-by-step explanations for complex subjects, making quality education more accessible.

8.2. Software Development and Code Debugging

In the realm of software engineering, OpenThoughts-114k has bolstered AI’s ability to understand and generate code.

- Code Generation: Developers can leverage AI to automatically generate boilerplate code, accelerating the development process.

- Bug Detection and Fixing: AI models identify potential issues in codebases and suggest fixes, reducing the time spent on debugging.

8.3. Scientific Research and Data Analysis

Researchers benefit from AI models trained on OpenThoughts-114k through enhanced data interpretation and hypothesis generation.

- Data Pattern Recognition: AI systems detect complex patterns in large datasets, leading to new scientific discoveries.

- Automated Experimentation: AI proposes and simulates experiments, optimizing research workflows.

8.4. Business Intelligence and Decision Making

Businesses utilize AI models to analyze market trends and inform strategic decisions.

- Predictive Analytics: AI forecasts market movements, aiding in proactive strategy formulation.

- Customer Insights: Analyzing consumer data helps businesses tailor products and services to meet customer needs.

8.5. Healthcare Diagnostics

The OpenThoughts-114k dataset is also relevant to medical AI applications, particularly healthcare diagnostics and patient care.

- AI-Assisted Medical Diagnosis: AI models trained on OpenThoughts-114k can improve the ability of diagnostic systems to reason through complex medical cases, interpret symptoms and lab reports, and propose differential diagnoses.

- Medical Research and Drug Discovery: The dataset can help AI analyze large amounts of biomedical literature, identify patterns in clinical data, and predict drug interactions, supporting drug discovery and personalized medicine.

- AI-Driven Healthcare Chatbots: Medical chatbots powered by reasoning-trained AI can give patients instant health information, reducing the burden on human healthcare professionals.

These applications show how AI reasoning advancements, fueled by datasets like OpenThoughts-114k, could help reshape modern medicine and improve patient outcomes.

9. OpenThoughts-114k vs. Other AI Reasoning Datasets

Comparing OpenThoughts-114k with existing AI reasoning datasets highlights its strengths and areas where it surpasses other datasets in quality, scale, and applicability.

9.1 Comparison with Other AI Datasets

| Dataset | Size | Focus Area | Reasoning Complexity | Availability |
|---|---|---|---|---|
| GSM8K | 8,500 problems | Grade-school math | Moderate | Open-source |
| MATH | 12,500 problems | High-school math | High | Open-source |
| ARC | 1,200 problems | Logical reasoning | High | Open-source |
| CodeXGLUE | Varies | Programming | Medium-High | Open-source |
| OpenThoughts-114k | 114,000 problems | Mathematics, Science, Coding, Logical Puzzles | Very High | Fully Open-Source |

From this comparison, it’s clear that:

- OpenThoughts-114k is the largest of the reasoning datasets compared here.

- It covers multiple domains, unlike MATH or GSM8K, which focus solely on mathematics.

- It includes stepwise reasoning traces, improving AI model interpretability.

9.2 Unique Features of OpenThoughts-114k

OpenThoughts-114k sets itself apart from other datasets through the following:

- Diverse Problem Set: Covers mathematics, coding, science, and logic puzzles, making it more versatile.

- Stepwise Reasoning Traces: Unlike GSM8K or MATH, OpenThoughts-114k provides detailed thought processes, enabling models to learn structured problem-solving approaches.

- Generalization Across Domains: AI models trained on OpenThoughts-114k demonstrate better adaptability to unseen tasks compared to models trained on single-domain datasets.

- Scalability and Open-Access: While some advanced datasets are restricted to industry research labs, OpenThoughts-114k remains open-source, promoting collaboration and innovation.

9.3 Key Takeaways from the Comparison

- Largest of the reasoning datasets compared here

- Supports multi-domain training (math, coding, logic, science)

- Best for generalization and complex reasoning tasks

- Open-source and publicly available

By combining scale, diversity, and quality, OpenThoughts-114k outperforms other reasoning datasets in enabling AI to handle real-world problem-solving scenarios.

10. Challenges and Future Improvements for OpenThoughts-114k

While OpenThoughts-114k is a groundbreaking dataset, there are still challenges and areas for improvement.

10.1 Current Limitations of OpenThoughts-114k

Despite its advantages, the dataset faces some challenges:

1. AI-Generated Reasoning Traces May Contain Errors

- Some AI-generated reasoning paths may contain inaccuracies that require human verification.

- Future improvements should include more human-labeled examples to improve explanation accuracy.

2. Limited Multi-Lingual Support

- The dataset is primarily in English, limiting accessibility for non-English-speaking researchers.

- Future updates should introduce multi-lingual datasets to expand AI generalization across languages.

3. Handling Complex Real-World Scenarios

- While OpenThoughts-114k excels at structured problem-solving, it may not yet capture unstructured, real-world data such as ambiguous problem statements or multi-modal reasoning (text + images + audio).

- Future iterations could include visual problem-solving tasks and real-world datasets.

10.2 Planned Improvements and Roadmap for OpenThoughts-114k

The OpenThoughts team has outlined key improvements to enhance the dataset:

- Increased Human Verification

- More expert-reviewed problems and solutions to improve quality.

- Crowdsourced community contributions to validate AI-generated traces.

- Expansion into Multi-Lingual AI Training

- Future releases will introduce multi-language support, helping non-English AI models develop reasoning skills.

- Integration of Real-World and Multi-Modal Data

- OpenThoughts-114k 2.0 will incorporate more diverse reasoning problems, including visual reasoning tasks and real-time decision-making datasets.

- Collaborations with AI Research Labs

- Partnering with leading AI institutions and universities to refine dataset structure.

- Encouraging open-source contributions to continually improve problem diversity.

10.3 Final Thoughts on OpenThoughts-114k’s Future

- Expanding human verification efforts to ensure AI reasoning accuracy.

- Multi-language dataset expansion to support non-English AI training.

- Integration of multi-modal reasoning challenges for a richer AI learning experience.

- Increased collaboration with AI research institutions to refine and validate dataset quality.

By implementing these enhancements, OpenThoughts-114k is well positioned to remain a leading open dataset for AI reasoning and to help shape intelligent, explainable, and multi-domain AI systems.

11. Accessing and Contributing to OpenThoughts-114k

The OpenThoughts-114k dataset is a pivotal resource for researchers and developers aiming to enhance AI reasoning capabilities. This section provides detailed guidance on how to access the dataset and contribute to its ongoing development.

11.1. Accessing OpenThoughts-114k

OpenThoughts-114k is openly accessible to the AI community, promoting transparency and collaboration. Here’s how you can obtain and utilize the dataset:

- Hugging Face Platform: The dataset is hosted on Hugging Face, a renowned platform for machine learning resources.

  - Access Link: Visit the OpenThoughts-114k page on Hugging Face (huggingface.co/datasets/open-thoughts/OpenThoughts-114k).

  - Loading the Dataset: Use the `datasets` library from Hugging Face to load OpenThoughts-114k into your environment:

    ```python
    from datasets import load_dataset

    # Load the default subset of OpenThoughts-114k (train split)
    dataset = load_dataset("open-thoughts/OpenThoughts-114k", split="train")
    ```

- Dataset Structure: The dataset comprises various subsets, including:

  - Default Subset: Contains ready-to-train data used for fine-tuning models like OpenThinker-7B and OpenThinker-32B.

  - Metadata Subset: Includes additional columns used during dataset construction, such as `problem`, `ground_truth_solution`, `deepseek_reasoning`, `deepseek_solution`, `domain`, `source`, `test_cases` (for code), and `starter_code` (for code).
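Before fine-tuning on the default subset, each row usually has to be converted into the role/content message list that chat templates expect. The sketch below shows one way to do that. It assumes, based on the dataset card rather than on this article, that each row has a `system` string and a `conversations` list whose turns carry `from` and `value` keys. `ROLE_MAP` and `to_messages` are hypothetical helpers, not part of any library.

```python
from typing import Dict, List

# Hypothetical mapping from ShareGPT-style speaker tags to chat-template roles.
ROLE_MAP = {"user": "user", "human": "user", "assistant": "assistant", "gpt": "assistant"}


def to_messages(row: Dict) -> List[Dict[str, str]]:
    """Convert one row (assumed `system` + `conversations` columns) to role/content messages."""
    messages: List[Dict[str, str]] = []
    # Prepend the system prompt only when the row has a non-empty one.
    if row.get("system"):
        messages.append({"role": "system", "content": row["system"]})
    for turn in row["conversations"]:
        role = ROLE_MAP.get(turn["from"])
        if role is None:
            raise ValueError(f"unexpected speaker tag: {turn['from']!r}")
        messages.append({"role": role, "content": turn["value"]})
    return messages


# Toy row in the assumed format (not taken from the dataset).
example = {
    "system": "Reason step by step, then give the final answer.",
    "conversations": [
        {"from": "user", "value": "What is 2 + 3?"},
        {"from": "assistant", "value": "2 + 3 = 5, so the answer is 5."},
    ],
}

for message in to_messages(example):
    print(f"{message['role']}: {message['content']}")
```

With Hugging Face `transformers`, a list like this can typically be passed to a tokenizer's `apply_chat_template` method. Check the target model's chat template before fine-tuning, because templates differ in how they handle system prompts.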

For a comprehensive understanding of the dataset’s structure and content, refer to the OpenThoughts GitHub Repository.
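The metadata subset exposes the construction columns directly, which makes it convenient for slicing the data by domain. The sketch below assumes the subset loads as a configuration named `metadata` and that `domain` holds short labels such as `math` or `code`. The helper names are hypothetical. The download is wrapped in a function, so the pure helpers can be tried offline on the toy rows.

```python
from collections import Counter
from typing import Dict, Iterable


def load_metadata_split():
    """Download the metadata subset (needs network access and `pip install datasets`)."""
    # Imported here so the pure helpers below still run without the library.
    from datasets import load_dataset
    return load_dataset("open-thoughts/OpenThoughts-114k", "metadata", split="train")


def domain_counts(rows: Iterable[Dict]) -> Counter:
    """Count examples per value of the `domain` column."""
    return Counter(row["domain"] for row in rows)


def has_tests(row: Dict) -> bool:
    """True when `test_cases` is present and non-empty (expected only for code problems)."""
    return bool(row.get("test_cases"))


# Toy rows standing in for the real split; the values are illustrative only.
sample = [
    {"domain": "math", "test_cases": None},
    {"domain": "code", "test_cases": '{"inputs": ["1"], "outputs": ["1"]}'},
    {"domain": "code", "test_cases": ""},
]

print(domain_counts(sample))                 # Counter({'code': 2, 'math': 1})
print(sum(has_tests(row) for row in sample))  # 1
```

On the real split, `load_metadata_split().filter(has_tests)` keeps only rows with test cases. `Counter(ds["domain"])` counts domains without materializing every column of every row.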

11.2. Contributing to OpenThoughts-114k

Contributions from the community are vital for the continuous improvement and expansion of OpenThoughts-114k. Here’s how you can get involved:

- Bespoke Curator Tool: The dataset was created using the Bespoke Curator, a tool designed for synthetic data curation.

  - Overview: Bespoke Curator facilitates the creation of synthetic data pipelines, aiding in training models and extracting structured data efficiently.

  - Access: Learn more about the tool and its applications on the Bespoke Curator GitHub page.

- Community Engagement: Join the OpenThoughts community to collaborate with other researchers and developers:

  - GitHub Repository: Contribute by reporting issues, suggesting enhancements, or submitting pull requests on the OpenThoughts GitHub Repository.

  - Discussions and Forums: Participate in discussions to share insights, ask questions, and stay updated on the latest developments.

By engaging with the community and utilizing the Bespoke Curator tool, you can play a significant role in advancing the quality and scope of OpenThoughts-114k.
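This article does not state the project's acceptance criteria for new examples. The sketch below is a purely hypothetical pre-submission check. It verifies that a proposed example fills the metadata columns listed in Section 11.1 and that code problems include test cases. Which columns are mandatory is an assumption, and `check_candidate` is not part of Bespoke Curator or the OpenThoughts repository.

```python
from typing import Dict, List

# Columns named for the metadata subset; treating these as mandatory is an assumption.
REQUIRED = ("problem", "ground_truth_solution", "domain", "source")
CODE_ONLY = ("test_cases",)


def check_candidate(example: Dict) -> List[str]:
    """Return a list of issues with a proposed example (an empty list means it passed)."""
    issues = []
    for col in REQUIRED:
        value = example.get(col)
        if not isinstance(value, str) or not value.strip():
            issues.append(f"missing or empty column: {col}")
    # Code problems are assumed to need test cases so solutions can be executed.
    if example.get("domain") == "code":
        for col in CODE_ONLY:
            if not example.get(col):
                issues.append(f"code problems need: {col}")
    return issues


candidate = {
    "problem": "Reverse a string.",
    "ground_truth_solution": "s[::-1]",
    "domain": "code",
    "source": "hypothetical",
}
print(check_candidate(candidate))  # ['code problems need: test_cases']
```

Returning a list of issues instead of raising on the first problem lets a contributor fix everything in one pass before opening a pull request.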

12. Conclusion and Future Trends in AI Reasoning

The OpenThoughts-114k dataset is a significant contribution to open efforts to enhance AI reasoning capabilities. Its comprehensive and diverse problem sets support applications in sectors ranging from education to healthcare.

12.1. The Impact of OpenThoughts-114k

OpenThoughts-114k has made notable contributions to AI development:

- Advancement in AI Reasoning: Models fine-tuned on this dataset, such as OpenThinker-7B and OpenThinker-32B, show improved performance on complex problem-solving and logical reasoning tasks.

- Diverse Applications: The dataset’s versatility has enabled AI applications across multiple domains, including education, software development, scientific research, business intelligence, and healthcare.

12.2. Future Trends in AI Reasoning

As AI continues to evolve, several trends are emerging in the realm of AI reasoning:

- Development of Larger and More Diverse Datasets: Efforts are underway to expand datasets like OpenThoughts-114k to include more examples and a broader range of problem types, enhancing AI’s generalization capabilities.

- Integration of Multimodal Data: Future AI models are expected to process and reason across various data types, including text, images, and audio, leading to more comprehensive understanding and problem-solving abilities.

- Emphasis on Explainability: There is a growing focus on developing AI systems that can provide transparent and interpretable reasoning processes, fostering trust and facilitating better human-AI collaboration.

- Ethical AI Development: Ensuring fairness, mitigating biases, and protecting user privacy remain paramount as AI systems become more integrated into society.

By staying abreast of these trends and actively contributing to initiatives like OpenThoughts-114k, researchers and developers can play a pivotal role in shaping the future of AI reasoning.
